I know, I know. I don’t speak about interoperability much, and y’all know what I think of IFC, but a recent discussion prompted me to lay out a more articulated argument around the issue, because I do not think, in fact, that IFC is the problem. It’s people.

Surprise, surprise.

1. The Mirage of “Solving” Interoperability

1.1. A.k.a. Why file formats alone haven’t fixed anything

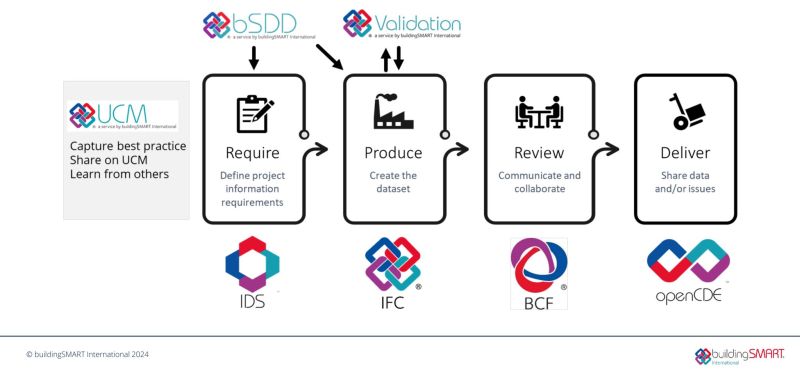

In the world of digital construction, interoperability is often viewed as a purely technical hurdle, a problem of file formats, plug-ins to untangle property sets, and One API to Rule Them All. The prevailing belief is that if only we could agree on a common data structure, standard or platform, the friction between disciplines would vanish. The industry has responded with a proliferation of interoperability solutions around structuring data: IFC and MVD, COBie, BCF, and more, each promising to act as a universal translator between software environments. The Babel Fish of BIM, if you will, without the same glorious result.

“Now it is such a bizarrely improbable coincidence that something so mind-bogglingly useful could have evolved purely by chance that some thinkers have chosen to see it as a final and clinching proof of the non-existence of God.”

Yet despite decades of standardisation efforts and the rise of so-called open formats, the actual experience on projects tells a different story. Teams still struggle to exchange information reliably. Models arrive late or incomplete. Coordination platforms become bottlenecks instead of bridges. And, perhaps most tellingly, the same questions resurface on every job: Which version of the model is this? Can I trust it? Who touched it last? Even when the IFC file arrives from someone who brags about having mastered it, trust me, the confusion remains.

1.2. Why’s that?

The promise of interoperability through standardised data schemes like IFC rests on a critical assumption: that data structure alone can guarantee shared understanding. But digital construction is not a purely computational endeavour. It is a fundamentally collaborative act between disciplines, each with its own set of tools, priorities, and mental models.

An IFC file may technically “contain” all the right objects — a beam, a wall, a door — but it doesn’t carry with it the context that explains why something was modelled in a certain way. It doesn’t resolve misalignments in naming conventions, object definition levels, or expectations for what constitutes a “complete” deliverable. The assumption that interoperability is achieved at the point of file exchange ignores the reality that each stakeholder reads that file through the lens of their own discipline and risk exposure.

This is not a failure of technology. It’s a mismatch between what the tools are designed to do and how people actually work together.

Our current solution? We document the shit out of our digital models. Which, as Agile teaches us, rarely works.

1.3. Interoperability as a sociotechnical challenge

True interoperability requires more than technical compliance and documentation — it demands cultural alignment. This means recognising that every discipline in a project team brings not just different software, but different assumptions, languages, and incentives. An architect may focus on geometry and intent; an engineer on performance and tolerances; a contractor on sequencing and risk mitigation. These aren’t just different ways of using data — they’re different ways of thinking through the model.

Interoperability, then, is not only a problem of digital infrastructure but of social infrastructure. It’s about building mutual trust, shared language, and coordinated workflows across professional boundaries. And it’s about redesigning the legal, procedural, and contractual frameworks that currently discourage meaningful collaboration, from liability fears to the rightful ban on suggesting an edit on someone else’s model.

The real barrier isn’t whether your software exports in IFC 4 or whether you know how to pSet like a pro. It’s whether your team can have the right conversation at the right time about what information matters, to whom, and why.

2. The Tool Paradox: Different Roles, Different Needs

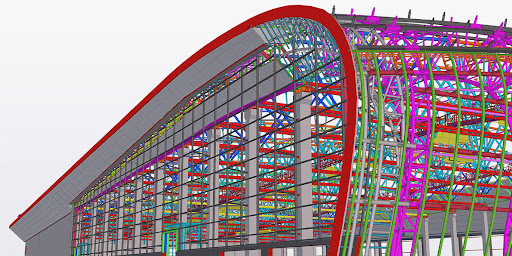

Digital construction is a multidisciplinary ecosystem, and its diversity is both its strength and its weakness, as happens in any natural environment. The more complex an ecosystem, in fact, the more interconnected its parts and the more unpredictable its downfall. From early design to site coordination to long-term operation, each actor in the building lifecycle relies on digital tools tailored to their specific objectives, yet this diversity often clashes with the ideal of seamless interoperability. When we talk about using “a common data environment,” what we mean in practice is trying to harmonise different technological solutions and, most importantly, to fit a dozen incompatible processes into a single framework. The result often isn’t harmony. It’s a forced compromise. Let’s see how and why.

2.1. Design, Construction, Operation: Diverging Digital Ecosystems

The needs of an architect during concept design are worlds apart from those of a contractor managing sequencing or a facility manager planning long-term asset maintenance (if you’ve ever seen one, please let me know). Each role operates within a different temporal window and a different definition of precision.

- Design teams prioritise spatial logic, visual intent, and formal exploration. Hence, they need freedom, fluid geometry, and tools that support iteration, not rigid structure.

- Contractors focus on buildability, quantities, and phasing. Hence, they care about clash detection, scheduling integration, and constructible detail, not conceptual schemes.

- Facility managers look for structured, persistent, queryable data tied to long-term performance. Hence, they need reliable metadata, not rapid visual prototypes.

These divergent priorities generate equally divergent digital ecosystems. The software stack that empowers a designer (e.g., Rhino + Grasshopper + Dynamo + Revit) is often incompatible with the one used by the contractor (e.g., Navisworks or Synchro), let alone the CAFM system running post-handover. Even when data can be exchanged, the way it is interpreted and used is fundamentally shaped by discipline-specific goals and workflows.

2.2. There’s no One Tool to Rule Them All

The construction industry has long flirted with the dream of a universal platform — a single software that could serve the entire building lifecycle. Autodesk is always pushing for Revit to be a software for all seasons, for instance. BIM, as a concept, often carries this implicit promise. In reality, this vision breaks down as soon as specialised demands come into play. What’s charming is that it breaks even if they all use the same software.

This has to do with the modelling strategy that each party bakes into the information model through its software of choice. No single data structure can simultaneously optimise for design fluidity, construction logistics, and asset lifecycle management without either becoming impossibly complex or diluting its value to each discipline to the point of irrelevance.

Attempts at unified data structures frequently lead to frustration: tools that try to do everything tend to do nothing particularly well and — more dangerously — they encourage the illusion of collaboration while masking the persistence of silos. Just because multiple stakeholders use the same technological solution or data structure, it doesn’t mean they’re aligned in purpose, process, or expectations.

2.3. From Flexibility to Fragmentation: A Trade-off

This landscape creates a paradox. The freedom to choose the best tool for the job — essential for productivity and quality — inevitably fragments the digital environment. Every export, conversion, or workaround introduces friction and the potential for error. As a result, interoperability isn’t just a technical function. It becomes a negotiation of intent across fragmented contexts.

This is where the cultural dimension resurfaces. If teams see software as territorial — “my model,” “your file,” “their platform” — then every tool boundary becomes a political border. Instead of asking how we can share data, we end up debating whether we should. Protectionism replaces cooperation. Innovation slows down.

Real interoperability doesn’t require uniformity. It requires mutual respect for the diversity of tools and the roles they serve — and a willingness to build bridges that preserve context, not just single attributes.

3. IDS anyone?

This, in theory, is where Model View Definitions came into play. A Model View Definition (MVD) is a specification that defines a subset of the full IFC schema tailored to a particular use case or workflow within the building lifecycle, which sounds an awful lot like what we need.

3.1. What It Does

If IFC is a rich data schema — designed to represent virtually every building element and piece of metadata imaginable — most tools and project teams don’t need or can’t handle all of its capabilities at once. That’s where the MVD comes in. An MVD queries and structures the IFC data to ensure that:

- only the relevant entities, properties, and relationships for a specific purpose are included;

- the exported model can be reliably imported, interpreted, and used by tools aligned to that purpose.

Some practical examples include:

- Coordination View: designed for geometric coordination between design disciplines, a.k.a. your run-of-the-mill Clash Detection;

- Design Transfer View: for handing off design data to other stakeholders who might be wondering what the hell it is that you’re doing with those pillars anyway;

- Reference View: for sharing geometry with clear visual fidelity, for people who might have to design walls around your columns.

In short:

The MVD is the instruction manual that tells the software which parts of the IFC schema to use, for what purpose, and how.
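To make the "subset for a purpose" idea concrete, here is a toy sketch in plain Python. It is not how real MVDs are authored or applied by any tool: the `Element` class, the `COORDINATION_VIEW` dictionary and the `apply_view` function are hypothetical names invented for illustration, and the model is just a list of made-up elements. It only shows the principle of keeping the classes and properties a given use case asks for and dropping the rest.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """A made-up stand-in for a model element (not a real IFC entity)."""
    ifc_class: str
    global_id: str
    properties: dict = field(default_factory=dict)


# Hypothetical "view": for each class in scope, the properties the use case needs.
COORDINATION_VIEW = {
    "IfcWall": {"IsExternal"},
    "IfcBeam": {"LoadBearing"},
}


def apply_view(elements, view):
    """Keep only the classes and properties listed in the view."""
    filtered = []
    for el in elements:
        wanted = view.get(el.ifc_class)
        if wanted is None:
            continue  # this class is out of scope for the use case
        props = {k: v for k, v in el.properties.items() if k in wanted}
        filtered.append(Element(el.ifc_class, el.global_id, props))
    return filtered


model = [
    Element("IfcWall", "w1", {"IsExternal": True, "FireRating": "REI60"}),
    Element("IfcFurnishingElement", "f1", {"Manufacturer": "ACME"}),
    Element("IfcBeam", "b1", {"LoadBearing": True}),
]
subset = apply_view(model, COORDINATION_VIEW)
```

Notice what the sketch cannot do: it has no idea why the furniture was modelled or whether the recipient expected the fire rating. The filter is mechanical; the agreement on what goes in the view is not.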

3.2. Why it Matters and Why it Fails

Even when two tools “support IFC,” they may support different MVDs… which explains why a model exported from one application might appear incomplete or garbled in another. The MVD essentially defines how interoperability is supposed to work in a given context. But if the wrong MVD is chosen — or misunderstood — the result is often frustration and data loss.

Also, consider the picture with the voxels. What happens when the subset that’s supposedly included in the MVD is not aligned with the scope, intent, and approach of the party that is developing the original model in the first place?

One of the problems is believed to be that an MVD works like a query: it only kicks in when you export the model. Many people don’t use the attributes in the property sets to achieve their model uses while they work; they just map them at export time.

Enter IDS. The new Information Delivery Specification promises to solve all that, as it isn’t a specification for querying the model at the end of the process: its promise is to implement a mask in the authoring tools, so that people are warned of the misalignments between their model and the standard data scheme as they model along.

In other words, you won’t find out that your IFC sucks only after you’ve exported it (and only if you bother to check): you could be warned step by step, as you model. Which would tremendously help, of course, as it aligns with the Agile principle of shifting quality control towards production. And it can be customised project by project. Which could be a disaster, but that’s a conversation for another time.
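The "warn me as I model" idea can be sketched in a few lines of plain Python. This is only an illustration of the principle, under heavy assumptions: real IDS files are XML documents with their own structure, and nothing here reads or writes them. The `REQUIREMENTS` list, the `check_element` function and the sample values are all hypothetical.

```python
# Hypothetical requirements: (class it applies to, property set, property name).
REQUIREMENTS = [
    ("IfcWall", "Pset_WallCommon", "FireRating"),
    ("IfcDoor", "Pset_DoorCommon", "FireRating"),
]


def check_element(ifc_class, psets):
    """Return human-readable warnings for one element, empty if it complies."""
    warnings = []
    for cls, pset, prop in REQUIREMENTS:
        if cls != ifc_class:
            continue  # this requirement does not apply to the element
        value = psets.get(pset, {}).get(prop)
        if value in (None, ""):
            warnings.append(f"{ifc_class}: missing {pset}.{prop}")
    return warnings


# Imagine these calls firing every time an element is created or edited.
ok_wall = check_element("IfcWall", {"Pset_WallCommon": {"FireRating": "REI60"}})
bad_door = check_element("IfcDoor", {"Pset_DoorCommon": {}})
```

The point of running a check like this at modelling time rather than at export is simply that the warning reaches the person who can still fix the problem cheaply.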

But will people actually bother?

4. Misaligned Mindsets: Culture Eats Data for Breakfast

The famous adage usually attributed to Peter Drucker, “Culture eats strategy for breakfast”, rings just as true in the world of digital construction. In an industry increasingly governed by models, standards, and protocols, it’s easy to assume that a structured BIM Execution Plan or a well-formed IFC export guarantees collaboration. But beneath the surface of compliance lies a deeper, more intractable problem: the human factor.

No matter how interoperable the file format, it’s the culture of the project team — the shared assumptions, behaviours, and incentives — that determines whether data will flow or stall.

4.1. Protectionism, Mistrust, and “Black Box” Workflows

In practice, many project actors operate in a mode of defensive collaboration. Rather than sharing information freely, teams tend to limit what they expose, protect their intellectual effort, and offload liability. Models become sealed containers — outputs, not shared resources. The result is a “black box” workflow, where each stakeholder receives a file, transforms it within their proprietary environment, and passes it along, without transparency or feedback.

This isn’t always malicious. Sometimes it’s about risk: who’s accountable if the model is wrong? Sometimes it’s about effort: why spend time preparing clean exports when the recipient “should have the same software”? And sometimes it’s just about habit: the inertia of siloed work inherited from CAD-era processes.

Yet, regardless of intent, the effect is the same: interoperability is reduced to transactional file exchanges. Trust is outsourced to the format, instead of being built between people.

4.2. Collaboration Stops at File Export (because contracts do)

One of the most common misconceptions in digital project delivery is that exporting an IFC is the final step in collaboration. “We delivered the data.” But data without context, explanation, or dialogue is just noise. And in many projects, that’s exactly what happens.

A federated model may be hosted on a platform within the Common Data Environment, but if no one agrees on update cycles and on element responsibilities that match the progress of the project, the result is not clarity, it’s confusion. At a certain point in construction, portions of those files will have to be handed over to different subcontractors, but no one will know how to do it because the data structure still reflects the design intent of the previous stage. In these cases, the appearance of interoperability masks the absence of real cooperation. And the root issue isn’t technical capacity, even if IFC isn’t the simplest thing to master. It’s the absence of shared understanding, mutual trust, and aligned expectations: in other words, culture.

Case Example: The Broken Handoff in a BIM Coordination Process

Consider this real-world scenario from a multidisciplinary hospital project:

- the architectural team works in a parametric environment, modelling complex geometry using custom families and a grouping strategy that works for them (even if I hate groups… boy do I hate groups);

- the MEP engineers, on a tight schedule, rely on standard IFC imports into their discipline-specific software to coordinate the piping layout;

- the project has a weekly coordination meeting, with a shared glossary of naming conventions and property sets that serve the purpose of delivering a final model for maintenance.

During one cycle, the architect exports a new version of the model in IFC, intending to show major updates in the surgical suite layout. But the MEP team opens the model and has no way of quickly identifying what changed. Their only option is to check spot by spot, duct by duct, if their work still has meaning. Many ducts appear misplaced or duplicated. Coordination grinds to a halt.

The problem?

Even if pSets were agreed and respected, there was no shared strategy on the here and now, on what actually mattered to the people involved in the process. The export was technically compliant. The data was “there.” But no one had discussed how changes would be interpreted, or how dependencies were being tracked. The MEP team wanted the architect to revert to PDFs with revision clouds instead of a seamless, model-based collaboration, and BIM risked being dropped altogether. The model exchange process had been stripped of its crucial element: trust. How we solved it is a story for another time. What matters here is that interoperability had technically been accomplished, but culturally it had failed.
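For what it's worth, the mechanical half of "what changed?" is not hard. The sketch below is not what was done on that project; it is a generic illustration that assumes each export can be reduced to a dictionary keyed by a stable element identifier, which is itself something the team has to agree on and respect. The `diff_models` function and the sample data are hypothetical.

```python
def diff_models(old, new):
    """Compare two {element_id: attributes} snapshots of a model."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    changed = sorted(g for g in old.keys() & new.keys() if old[g] != new[g])
    return {"added": added, "removed": removed, "changed": changed}


# Two made-up exports of the same area, one week apart.
v1 = {
    "wall-01": {"x": 0.0, "length": 5.0},
    "door-07": {"x": 2.0, "width": 0.9},
}
v2 = {
    "wall-01": {"x": 0.0, "length": 6.5},
    "door-09": {"x": 3.0, "width": 1.2},
}
report = diff_models(v1, v2)
```

A report like this tells the MEP team where to look. It does not tell them which changes matter to their ducts, and that part only comes out of a conversation.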

Outside the Construction Industry: when Interoperability meant Cultural Change

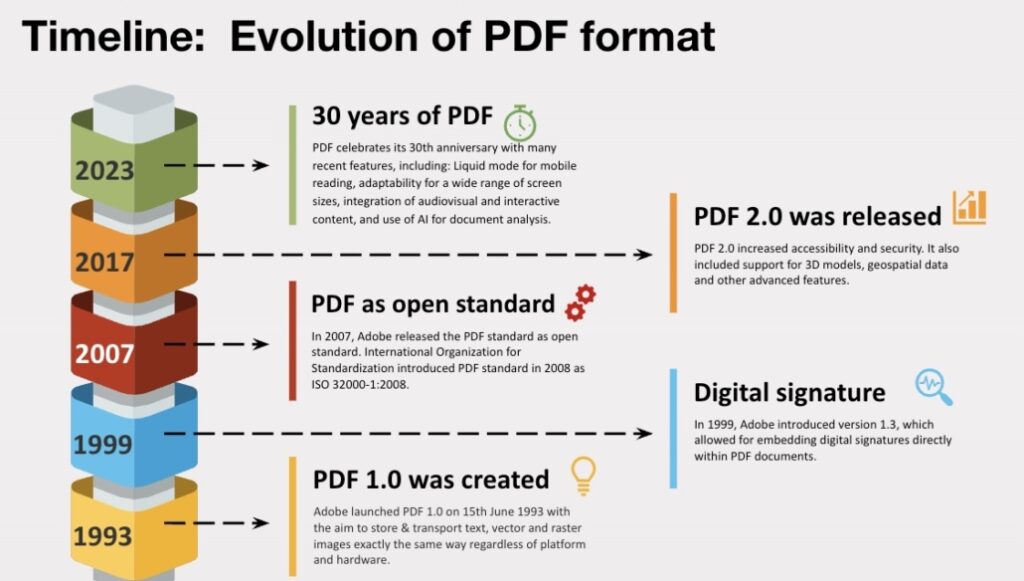

If construction wants to overcome its interoperability crisis, it must look beyond its own boundaries. So, as we often do, let’s seek inspiration outside the Construction Industry, because I often see IFC compared to PDFs and I’m not really sure we know what we’re talking about when we do that. Yes, because the PDF, as open as it might look, was born as a proprietary Adobe format and only became an ISO standard (ISO 32000) in 2008. And the two things aren’t in contradiction.

Other industries — some with even more complex data landscapes — have faced similar challenges and responded not with perfect tools, but with collective agreements and shared principles that were promoted, in many cases, by the very same companies manufacturing software. That’s also how IFC started, back in the day. In each successful case, however, true interoperability was only achieved after the industry accepted that the problem wasn’t just the file format: it was the fragmentation of expectations.

PDFs and the Publishing Industry: Standardisation Through Consensus

The PDF (which stands for Portable Document Format, in case you didn’t know) is often cited as a success story of digital interoperability. It wasn’t the most advanced format when it launched. It didn’t offer editing, modular data, or real-time bananas. What it did offer was predictability.

The publishing industry — from graphic designers to print houses — adopted PDF not because it was imposed, but because it was badly needed to solve a common frustration: inconsistent rendering of fonts, layouts, and image placement across different devices and platforms. PDF worked because the industry agreed that the final output mattered to everyone. That cultural consensus enabled the development of stable workflows, cross-platform reliability, and trust in the “frozen” nature of content.

In construction, we don’t have that kind of shared consensus on what models mean at each stage, and we don’t seem to have the same level of awareness regarding how much it means that the output stays consistent after our contract is done. Also, there’s a problem of workflow. We expect deliverables to remain editable — because the work to be done would be too much if you had to start from scratch at every stage — but also reliable; flexible, but also traceable. The success of PDF lies in limiting interpretation. The AEC industry hasn’t picked a direction yet.

Excel’s XML Transformation: Embracing Modularity

“But the PDF is just one example,” you might be thinking. You want another example? I’ll give you another example.

Microsoft Excel made a radical move in the early 2000s when it began introducing XML-based formats with Office XP (Excel 2002) and Office 2003. They didn’t make much noise, as they were limited in scope. The actual revolution arrived with Office 2007 (released in late 2006/early 2007), when it adopted the Office Open XML (OOXML) format. This turned what had been a proprietary, unbreakable binary file into a structured ZIP archive of modular data (spreadsheets, styles, and metadata exposed as readable XML components, with macros kept in a separate part of the archive). OOXML was standardised as ECMA-376 in December 2006, concurrent with Microsoft’s move, and later as ISO/IEC 29500:2008.

The shift wasn’t about creating a “universal spreadsheet”. It was about enabling interoperability through accessibility: other applications could now parse, extract, and reuse specific data layers without needing to reverse-engineer the entire file. That opened the door to custom applications, integrations, and a wider ecosystem of tools that could speak Excel without being Excel.
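A minimal sketch of what "speaking Excel without being Excel" looks like, using only the Python standard library. To stay self-contained it builds a stub archive in memory containing a single `xl/workbook.xml` part; that stub is an assumption made for the example and is not a complete workbook that Excel would open. The helper names `build_stub_archive` and `sheet_names` are hypothetical.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

# Namespace used by SpreadsheetML parts inside an .xlsx archive.
NS = {"m": "http://schemas.openxmlformats.org/spreadsheetml/2006/main"}

WORKBOOK_XML = (
    '<workbook xmlns="http://schemas.openxmlformats.org/spreadsheetml/2006/main">'
    "<sheets>"
    '<sheet name="Quantities" sheetId="1"/>'
    '<sheet name="Rooms" sheetId="2"/>'
    "</sheets>"
    "</workbook>"
)


def build_stub_archive():
    """Create an in-memory ZIP holding only the workbook part (illustrative stub)."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", zipfile.ZIP_DEFLATED) as zf:
        zf.writestr("xl/workbook.xml", WORKBOOK_XML)
    buf.seek(0)
    return buf


def sheet_names(archive):
    """Read the sheet names straight from the XML part, without any Office software."""
    with zipfile.ZipFile(archive) as zf:
        root = ET.fromstring(zf.read("xl/workbook.xml"))
    return [s.get("name") for s in root.findall("m:sheets/m:sheet", NS)]


names = sheet_names(build_stub_archive())
```

With a real file you would pass its path to `sheet_names` instead of the stub. The design point is that one part of the archive can be read in isolation, without loading or understanding everything else.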

The reasons for Microsoft to pursue an open strategy were several and interrelated; the change was equally driven by technological trends, customer demands, industry pressures, and regulatory expectations. For starters, adopting an XML-based file format enabled a wide array of tools and platforms to create, read, and write Office documents, thus spreading the format beyond native adopters. This fostered interoperability across different systems and applications, allowing for new levels of integration with line-of-business software and custom workflows rather than putting pressure on Microsoft to deliver every possible solution for the wide array of problems the software was used to tackle. In other words, the open format made it easier for developers to programmatically access and manipulate document content without relying on proprietary Office automation, broadening the ecosystem of third-party solutions and integrations.

There was also significant demand from public institutions, governments, and enterprise customers for open, standardised formats to ensure long-term access, digital preservation, and archival of documents. Governments and large organisations increasingly required open standards for procurement and compliance reasons, making openness a competitive necessity for Microsoft: the alternative was losing that chunk of the market altogether.

XML-based formats also brought several technical benefits: smaller file sizes due to ZIP compression, easier recovery from corruption, improved ability to locate and parse content, alongside the obvious ability to unzip a file and open or edit its parts with any XML editor.

In construction, some of these pressures exist, but they come from an array of different, fragmented voices. On one side, procurement needs open data schemes for the very same reasons Microsoft was pushed to go XML-based, but they aren’t the ones producing files: they’re just at the recipient’s end. On the other side, the suppliers of those files have no real benefit in actually supporting an open format because they receive none of the benefits that OOXML gave to operators. Data isn’t easier to access. IFC doesn’t make it simpler to craft new solutions. On the contrary, interoperability in the Construction Industry often suffers from the opposite approach: models bound into a single, monolithic, aggregated monster, exported as a single .ifc file, in which geometry and metadata are locked together but don’t really talk to each other anymore through a parametric strategy.

Excel’s lesson is that modularity enables specialisation without sacrificing integration, and it’s a lesson in business strategy: what trade-off are we offering professionals to “go open”, aside from a moral imperative when it comes to public procurement?

Aerospace and Automotive: Long-Term Collaboration Through Contractual Openness

One last topic to tackle is the contractual one, and let me do it through another example from an industry that’s simultaneously very close and very far from construction, especially when it comes to digitalisation.

Sectors like aerospace and automotive are frequently held up as models of digital maturity. You’ve heard it all before. But my theory is that their success in interoperability doesn’t come from better file formats alone. It comes from contractual clarity and cultural buy-in.

Can you read my file?

When will you receive it?

How will you use it?

Who updates what?

How do we manage change?

These are all questions the construction industry asks after the contract has been signed, because a contract is A Very Serious Business, and people doing BIM are just technicians who shouldn’t be present when the Adults are talking. Except people in those rooms often can’t oppose their thumbs.

Until those questions are embedded in contracts, governance models, and software pipelines, not left to informal interpretation or follow-up discussions, BIM will always be a pretty model to inflate the ego of people who consider themselves artists and couldn’t lay one brick on top of the other. In the automotive industry, data flows because the relationships are engineered to support it. Construction, by contrast, often relies on short-term, phase-based and project-based engagements with limited continuity. Teams assemble and disband quickly, with little incentive to build long-term alignment. In this environment, interoperability becomes a recurring burden, not a long-term investment.

The story so far…

Technical interoperability only succeeds when cultural interoperability comes first. Consensus, modular thinking, and contractual alignment aren’t “soft” issues: they’re the foundation for data-driven collaboration.

Where can we go from here?

The construction industry has spent decades trying to solve interoperability by perfecting data schemes, certifying software for compliance, and mandating standards. Yet, as we’ve seen, no scheme can compensate for a lack of shared understanding. Interoperability is not a feature: it’s a relationship.

If we want digital collaboration to work in AEC, we need to stop enforcing formats from the top down, drop the bullshit, and start co-creating protocols from the ground up. That means reshaping how we organise, contract, and communicate, not just how we export files.

Here are three practical shifts that could move us in that direction.

1. Establish “Working Agreements” Before Models Are Exchanged

Borrowing from agile methodologies and cross-disciplinary industries, teams can create pre-modelling collaboration protocols, meaning lightweight, living documents that define:

- What data each party needs (not just what the software can produce);

- Who is responsible for authoring, reviewing, and updating which components (and not just who’s responsible for authoring them);

- What naming conventions, LODs, and property sets will be used (naturally);

- What constitutes a “deliverable”, not just in geometry, but in meaning.

In theory, it should be what we do with a BIM execution plan, but too often that’s a document that gets drafted by a single hand in isolation. We should get rid of the bureaucratic approach, and avoid drafting documents as contractual appendices written to mitigate blame. These should be working agreements created by practitioners for practitioners — the social contracts of collaboration.

In practice: during project kickoff, schedule a dedicated “data expectations workshop” involving not only BIM coordinators but also project leads, site managers, and FM representatives. The goal is to map who uses what, when, and why.

2. Modularise Data Exchanges Around Use Cases

Instead of exporting “everything for everyone” through monolithic IFC files, adopt the modular approach to data exchange provided by MVDs, one that mirrors Excel’s XML evolution and the structured discipline of aerospace data environments.

For each use case (e.g. quantity take-off, fire strategy review, space management), define:

- A limited, consistent data scope: what elements and attributes are needed?

- A clear formatting convention;

- A named “data package” owner in the Scrum sense of the term, responsible for curation and traceability.

This should encourage clarity and reuse while avoiding overload and misinterpretation. It also fosters a culture of data stewardship rather than blind delivery.
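As a sketch, a use-case "data package" can be written down as a small, explicit record rather than left implicit in an export setting. Everything below is hypothetical: the `DataPackage` class, its fields, and the sample values are illustrative choices, not part of any standard or tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataPackage:
    """One exchange, scoped to one use case, with one named owner."""
    use_case: str
    owner: str
    element_classes: tuple
    attributes: tuple
    naming_convention: str

    def missing_attributes(self, element):
        """Attributes the package promises that this element does not carry."""
        return [a for a in self.attributes if a not in element]


# Illustrative package for quantity take-off; all values are made up.
qto = DataPackage(
    use_case="quantity take-off",
    owner="cost consultant",
    element_classes=("IfcWall", "IfcSlab"),
    attributes=("NetVolume", "Material"),
    naming_convention="<discipline>_<level>_<type>",
)
gaps = qto.missing_attributes({"NetVolume": 12.4})
```

The record is frozen on purpose: changing the scope of a package should be a visible decision taken by its owner, not a silent edit.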

In practice: consider structuring your Common Data Environment solution not by discipline but by exchange intent: coordination, procurement, maintenance, etc. This reorients collaboration around shared purpose rather than siloed responsibility.

3. Embed Interoperability into Contracts as a Shared Obligation

As seen in aerospace and automotive, legal clarity and alignment over time are critical to effective collaboration. In construction, interoperability is often framed as a deliverable rather than a shared practice, as an obligation for the supplier instead of a common good to be grown and harvested for everyone’s benefit.

Instead, we need to embed interoperability as a reciprocal obligation in project contracts. That means not just saying “export in IFC 2x3” or “use this pSet,” but agreeing on:

- what standards will be used and why;

- what versioning and issue management rules apply;

- who has the right to edit, overwrite, or annotate third-party models;

- how disputes over model interpretation or “broken data” are resolved.

This doesn’t mean making contracts longer — it means making them smarter. Think less about protecting yourself from failure and more about designing collaboration for success.

In practice: use pre-construction contracting workshops (e.g. early contractor involvement or IPD-like settings) to agree on interoperability protocols before authoring begins — and revisit them during the project as needs evolve. For fuck’s sake, involve a lawyer who understands or is willing to learn the technical aspects. They exist. They’re out there. I promise you.

Conclusion: from Data Exchange to Shared Intent

The real goal of interoperability is not clean geometry or perfectly mapped properties — it’s shared intent. It’s knowing that when you send a model, the recipient understands why you made the choices you did, how they’re meant to interpret it, and what decisions it supports downstream.

That shift requires more than standards, and frankly I don’t give a flying fuck about IFC. It requires empathy, clarity, and mutual investment. It means moving from a mindset of “how do I protect my work” to “how do we progress the project together.” And perhaps most importantly, it means accepting that interoperability is not something you enforce: it’s something people practice because they have an actual benefit.
